Virtual reality is set to be worth up to $7 billion by 2020. The web is definitely not going to remain an exclusively 2D environment during this time. In fact, there are already a few simple ways to bring VR into the browser. It is also incredibly fun to work with!

There are three potential ways to begin your development adventure into the Virtual Web:

- JavaScript, Three.js and Watching Device Orientation

- JavaScript, Three.js and WebVR (My new preferred method!)

- CSS and WebVR (still very early days)

I’ll go over each one and show a short summary of how each works.

JavaScript, Three.js and Watching Device Orientation

Most browser-based virtual reality projects currently work via the deviceorientation browser event. This tells the browser how the device is oriented and allows the browser to pick up if it has been rotated or tilted. In a VR context, this lets you detect when someone looks around and adjust the camera to follow their gaze.
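As a rough illustration of what the event gives us, the sketch below reads the alpha, beta and gamma angles (which the browser reports in degrees) and converts them to radians, the unit three.js rotations use. The readOrientation name is hypothetical and not part of any library, and the listener is only attached when a window object exists.

```javascript
// Hypothetical helper: convert a deviceorientation event's angles
// (reported in degrees) into radians. Returns null when the device
// reports no orientation data at all.
function readOrientation(event) {
  if (event.alpha === null || event.alpha === undefined) {
    return null;
  }
  var toRadians = Math.PI / 180;
  return {
    alpha: event.alpha * toRadians,        // rotation around the z axis
    beta: (event.beta || 0) * toRadians,   // front-to-back tilt (x axis)
    gamma: (event.gamma || 0) * toRadians  // left-to-right tilt (y axis)
  };
}

// Only attach the listener in a browser environment.
if (typeof window !== 'undefined') {
  window.addEventListener('deviceorientation', function (event) {
    var orientation = readOrientation(event);
    if (orientation) {
      console.log('Device orientation (radians):', orientation);
    }
  }, true);
}
```

In practice the three.js DeviceOrientationControls plugin does this conversion (and the trickier quaternion maths) for you, so a helper like this is mostly useful for debugging.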

To achieve a wonderful 3D scene within the browser, we use three.js, a JavaScript framework that makes it easy to create 3D shapes and scenes. It takes most of the complexity out of putting together a 3D experience and allows you to focus on what you are trying to put together within your scene.

I’ve written two demos here at SitePoint that use the Device Orientation method:

- Bringing VR to the Web with Google Cardboard and Three.js

- Visualizing a Twitter Stream in VR with Three.js and Node

If you are new to three.js and how to put together a scene, I’d recommend taking a look at the above two articles for a more in-depth introduction to this method. I will cover the key concepts here, but at a higher level.

The key components of each of these involve the following JavaScript files (you can get them from the example demos above, and you will also find them in the three.js examples download):

- three.min.js – Our three.js framework

- DeviceOrientationControls.js – This is the three.js plugin that provides the Device Orientation we discussed above. It moves our camera to meet the movements of our device.

- OrbitControls.js – This is a backup controller that lets the user move the camera using the mouse instead if we don’t have a device that has access to the Device Orientation event.

- StereoEffect.js – A three.js effect that splits the screen into a stereoscopic image angled slightly differently for each eye just like in VR. This creates the actual VR split screen without us needing to do anything complicated.

Device Orientation

The code to enable Device Orientation controls looks like so:

function setOrientationControls(e) {
  if (!e.alpha) {
    return;
  }

  controls = new THREE.DeviceOrientationControls(camera, true);
  controls.connect();
  controls.update();

  element.addEventListener('click', fullscreen, false);

  window.removeEventListener('deviceorientation', setOrientationControls, true);
}
window.addEventListener('deviceorientation', setOrientationControls, true);

function fullscreen() {
  if (container.requestFullscreen) {
    container.requestFullscreen();
  } else if (container.msRequestFullscreen) {
    container.msRequestFullscreen();
  } else if (container.mozRequestFullScreen) {
    container.mozRequestFullScreen();
  } else if (container.webkitRequestFullscreen) {
    container.webkitRequestFullscreen();
  }
}

The DeviceOrientation event listener provides an alpha, beta and gamma value when it has a compatible device. If we don’t have any alpha value, it doesn’t change our controls to use Device Orientation so that we can use Orbit Controls instead.

If it does have this alpha value, then we create a Device Orientation control and provide it our camera variable to control. We also set it to set our scene to fullscreen if the user taps the screen (we don’t want to be staring at the browser’s address bar when in VR).
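The decision described above can be pulled out into a small function of its own. This is a sketch rather than the code from the demos: chooseControlType is a hypothetical name, and it tests for a missing alpha with an explicit null check, so a device that happens to report an alpha of exactly 0 still counts as having orientation data (that is an assumption about the behaviour you want).

```javascript
// Hypothetical helper: decide which controls to use based on the first
// deviceorientation event (or the lack of usable data in it).
function chooseControlType(event) {
  var hasAlpha = !!event &&
    event.alpha !== null &&
    event.alpha !== undefined;
  return hasAlpha ? 'deviceorientation' : 'orbit';
}

// chooseControlType({ alpha: 42.5 }) -> 'deviceorientation'
// chooseControlType({ alpha: 0 })    -> 'deviceorientation'
// chooseControlType({ alpha: null }) -> 'orbit'
```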

Orbit Controls

If that alpha value isn’t present and we don’t have access to the device’s Device Orientation event, this technique instead provides a control to move the camera by dragging it around with the mouse. This looks like so:

controls = new THREE.OrbitControls(camera, element);
controls.target.set(
  camera.position.x,
  camera.position.y,
  camera.position.z
);
controls.noPan = true;
controls.noZoom = true;

The main things that might be confusing in the code above are noPan and noZoom. Basically, we don’t want to move physically around the scene via the mouse and we don’t want to be able to zoom in or out – we only want to look around.

Stereo Effect

In order to use the stereo effect, we define it like so:

effect = new THREE.StereoEffect(renderer);

Then on resize of the window, we update its size:

effect.setSize(width, height);

Within each requestAnimationFrame we set the scene to render through our effect:

effect.render(scene, camera);

Those are the basics of how the Device Orientation style of achieving VR works. It can be effective for a nice and simple implementation with Google Cardboard; however, it isn’t quite as effective on the Oculus Rift. The next approach is much better for the Rift.
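Putting the three StereoEffect calls above together, a resize handler and render loop might be wired up roughly like this. It is a sketch under assumptions: createStereoLoop and its option names are hypothetical, and the scene, camera, controls and effect objects are passed in, so the only three.js methods it relies on are update(), setSize(), render() and the camera's updateProjectionMatrix().

```javascript
// Hypothetical wiring for the resize handler and render loop.
// `options.requestFrame` stands in for window.requestAnimationFrame.
function createStereoLoop(options) {
  var running = false;

  function resize(width, height) {
    // Keep the camera's aspect ratio in step with the new size.
    options.camera.aspect = width / height;
    options.camera.updateProjectionMatrix();
    options.effect.setSize(width, height);
  }

  function frame() {
    if (!running) {
      return;
    }
    options.controls.update();                             // follow the device or mouse
    options.effect.render(options.scene, options.camera);  // draw both eyes
    options.requestFrame(frame);
  }

  return {
    resize: resize,
    start: function () {
      running = true;
      options.requestFrame(frame);
    },
    stop: function () {
      running = false;
    }
  };
}
```

In the browser you would pass window.requestAnimationFrame.bind(window) as requestFrame and call resize(window.innerWidth, window.innerHeight) from a resize listener.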

JavaScript, Three.js and WebVR

Looking to access the orientation of a VR headset like the Oculus Rift? WebVR is the way to do it at the moment. WebVR is an early and experimental JavaScript API that provides access to the features of Virtual Reality devices like Oculus Rift and Google Cardboard. At the moment, it is available on Firefox Nightly and a few experimental builds of Mobile Chrome and Chromium. One thing to keep in mind is that the spec is still in draft and is subject to change, so experiment with it but know that you may need to adjust things over time.

Overall, the WebVR API provides access to the VR device information via:

navigator.getVRDevices

I won’t go into lots of technical details here (I’ll cover this in more detail in its own future SitePoint article!). If you’re interested in finding out more, check out the WebVR editor’s draft. The reason I won’t go into detail is that there is a much easier method to implement the API.
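For the curious, here is a quick taste of the raw API before moving on. It reflects the early draft as I understand it, where navigator.getVRDevices() returns a promise of a device list containing HMDVRDevice and PositionSensorVRDevice objects; treat those names as assumptions to check against the draft you are targeting. splitVRDevices is a hypothetical helper, and the browser code only runs when all of those globals are present.

```javascript
// Hypothetical helper: split a list of VR devices into headsets and
// position sensors. The two constructors are passed in so the function
// can be exercised without a VR-capable browser.
function splitVRDevices(devices, HeadsetType, SensorType) {
  var result = { headsets: [], sensors: [] };
  devices.forEach(function (device) {
    if (device instanceof HeadsetType) {
      result.headsets.push(device);
    } else if (device instanceof SensorType) {
      result.sensors.push(device);
    }
  });
  return result;
}

// In a browser exposing the early WebVR draft (an assumption), the
// device list arrives through a promise.
if (typeof navigator !== 'undefined' &&
    typeof navigator.getVRDevices === 'function' &&
    typeof HMDVRDevice !== 'undefined' &&
    typeof PositionSensorVRDevice !== 'undefined') {
  navigator.getVRDevices().then(function (devices) {
    var found = splitVRDevices(devices, HMDVRDevice, PositionSensorVRDevice);
    console.log('Headsets:', found.headsets.length,
                'Sensors:', found.sensors.length);
  });
}
```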

This aforementioned easier method to implement the WebVR API is to use the WebVR Boilerplate from Boris Smus. It provides a good level of baseline functionality that implements WebVR and gracefully degrades the experience for different devices. It is currently the nicest web VR implementation I’ve seen. If you are looking to build a VR experience for the web, this is currently the best place to start!

To start using this method, download the WebVR Boilerplate on GitHub.

You can focus on editing the index.html and using all of the files in that set up, or you can implement the specific plugins into your own project from scratch. If you’d like to compare the differences in each implementation, I’ve migrated my Visualizing a Twitter Stream in VR with Three.js and Node example from above into a WebVR powered Twitter Stream in VR.

To include this project into your own from scratch, the files you’ll want to have are:

- three.min.js – Our three.js framework of course

- VRControls.js – A three.js plugin for VR controls via WebVR (this can be found in bower_components/threejs/examples/js/controls/VRControls.js in the Boilerplate project)

- VREffect.js – A three.js plugin for the VR effect itself that displays the scene for an Oculus Rift (this can be found in bower_components/threejs/examples/js/effects/VREffect.js in the Boilerplate project)

- webvr-polyfill.js – This is a polyfill for browsers which don’t fully support WebVR just yet (this can be found on GitHub and also in bower_components/webvr-polyfill/build/webvr-polyfill.js in the Boilerplate code)

- webvr-manager.js – This is part of the Boilerplate code which manages everything for you, including providing a way to enter and exit VR mode (this can be found in build/webvr-manager.js)

Implementing it requires only a few adjustments from the Device Orientation method. Here’s an overview for those looking to try this method:

Controls

The VR controls are quite simple to set up. We can just assign a new VRControls object to the controls variable we used earlier. The orbit controls and device orientation controls should not be necessary as the Boilerplate should now take care of browsers without VR capabilities. This means your scene should still work quite well on Google Cardboard too!

controls = new THREE.VRControls(camera);

VR Effect

The effect is implemented in much the same way as the StereoEffect was. Just replace that effect with our new VREffect one:

effect = new THREE.VREffect(renderer);
effect.setSize(window.innerWidth, window.innerHeight);

However, we do not render through that effect in this method. Instead, we render through our VR manager.

VR Manager

The VR manager takes care of all our VR entering/exiting and so forth, so this is where our scene is finally rendered. We initially set it up via the following:

manager = new WebVRManager(renderer, effect, {hideButton: false});

The VR manager provides a button which lets the user enter VR mode if they are on a compatible browser, or full screen if their browser isn’t capable of VR (full screen is what we want for mobile). The hideButton parameter says whether we want to hide that button or not. We definitely do not want to hide it!

Our render call then looks like so. It uses the timestamp variable that is passed into our update() function:

function update(timestamp) {
  controls.update();
  manager.render(scene, camera, timestamp);
}

With all of that in place, you should have a working VR implementation that translates itself into various formats depending on the device.
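For reference, here is how the controls, effect and manager might fit together in one place. It is a sketch rather than the Boilerplate's own code: setupWebVR is a hypothetical wrapper, and THREE and WebVRManager are passed in as arguments on the assumption that the scripts listed earlier have already been loaded.

```javascript
// Hypothetical wrapper around the controls, effect and manager set-up.
// Returns the per-frame update function.
function setupWebVR(THREE, WebVRManager, renderer, scene, camera, size) {
  var controls = new THREE.VRControls(camera);
  var effect = new THREE.VREffect(renderer);
  effect.setSize(size.width, size.height);

  // The manager draws the scene for us, so we never call effect.render().
  var manager = new WebVRManager(renderer, effect, { hideButton: false });

  return function update(timestamp) {
    controls.update();
    manager.render(scene, camera, timestamp);
  };
}

// Browser usage, assuming renderer, scene and camera already exist:
// var update = setupWebVR(THREE, WebVRManager, renderer, scene, camera,
//   { width: window.innerWidth, height: window.innerHeight });
// function animate(timestamp) {
//   update(timestamp);
//   requestAnimationFrame(animate);
// }
// requestAnimationFrame(animate);
```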

Is renderer.getSize() returning an error? This drove me mad for a few hours but all you’ll need to do to fix this is – update three.js!
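If you want to confirm that an old three.js build is the culprit before upgrading, a quick check along these lines can help. supportsGetSize is a hypothetical helper; THREE.REVISION is the revision string three.js exposes, and it is only logged here when a THREE global is present.

```javascript
// Hypothetical check: does this renderer expose getSize()?
function supportsGetSize(renderer) {
  return !!renderer && typeof renderer.getSize === 'function';
}

if (typeof THREE !== 'undefined') {
  // Log which revision of three.js is actually loaded on the page.
  console.log('three.js revision:', THREE.REVISION);
}
```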

What the WebVR Boilerplate Looks Like In Action

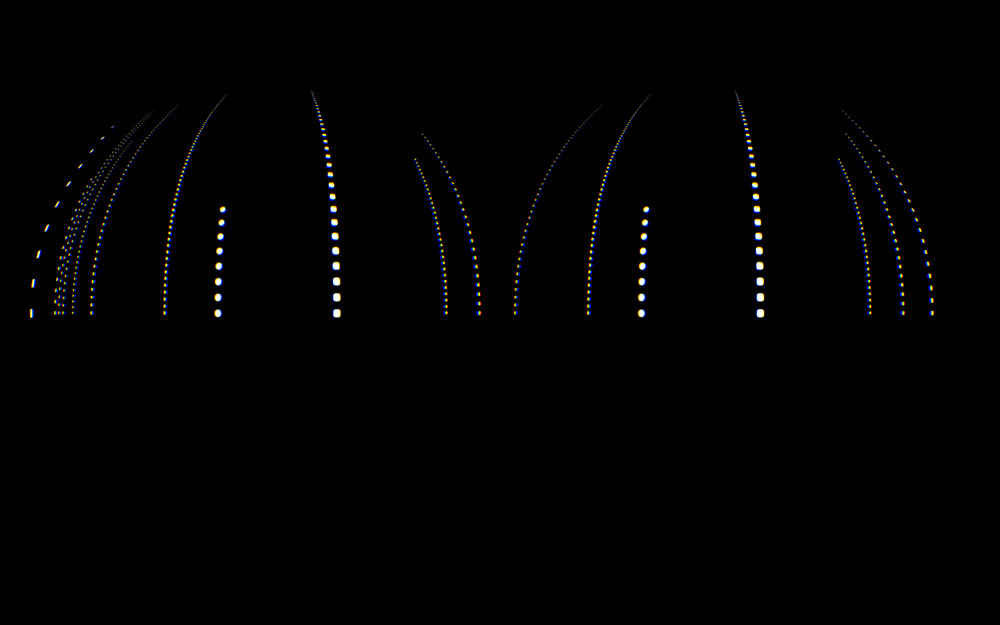

Here is what the view of my Twitter example looks like on a browser which supports VR:

Here is it within the Oculus Rift view that appears when you click the VR icon:

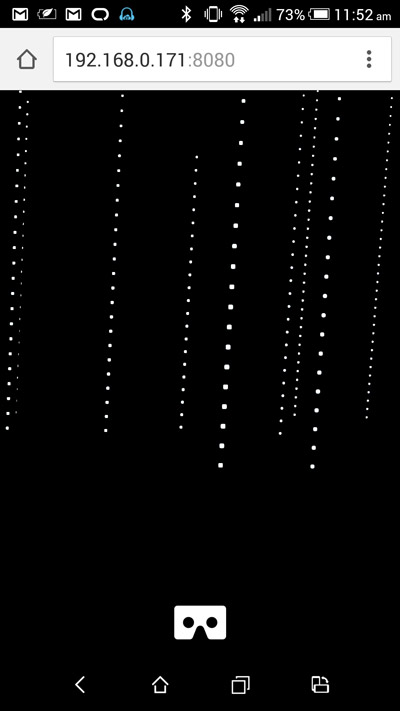

This is what the view looks like on a smartphone. Device orientation still allows us to look around the scene and we don’t have a split screen. A great responsive view of the content:

If we click the VR icon on mobile, we get a fullscreen view for Google Cardboard style devices:

CSS and WebVR

Mozilla is aiming to bring VR viewing capabilities to its browser in the Firefox Nightly versions too; however, it is still quite early days! I haven’t had much luck getting it to work on my Mac and Oculus set up. The sorts of conventions that Vladimir Vukićević from Firefox has mentioned include integrating CSS 3D transforms with VR fullscreen mode.

As an example from Vladimir’s blog post, he says that elements with transform-style: preserve-3d should render twice from the two viewpoints to bring it into VR:

#css-square {
  position: absolute;
  top: 0; left: 0;

  transform-style: preserve-3d;
  transform: translate3d(100px, 100px, 100px);
  width: 250px;
  height: 250px;
  background: blue;
}

If you know of any demos using VR and CSS, please do let me know! I haven’t been able to track any down. The idea of bringing the HTML and CSS part of the web into VR is without a doubt a really intriguing concept. It’s inevitable that the web will enter the VR realm like this at some point as we all slowly shift to VR devices!

Conclusion

The world of technology is slowly but surely going to embrace Virtual Reality in the coming years as our technological capabilities match up with our wild aspirations! The one thing that will drive VR adoption and its value is content. We need to get VR content out there for VR users to enjoy! What better and easier way is there than via the web?

If you take the plunge and build a VR demo using this code, please share it in the comments or get in touch with me on Twitter (@thatpatrickguy). I’d love to put on my Oculus Rift or Google Cardboard and take it for a spin!

I’ve got a set of curated links on VR and AR via JavaScript for those looking for a quick reference. Head over to Dev Diner and check out my Dev Diner VR and AR with JavaScript Developer Guide, full of the links mentioned in this article, other great SitePoint articles and more. If you’ve got other great resources I don’t have listed, please let me know too!

Frequently Asked Questions about Building VR on the Web

What are the prerequisites for building VR on the web?

To start building VR on the web, you need to have a basic understanding of HTML, CSS, and JavaScript. You should also be familiar with WebGL, a JavaScript API for rendering 2D and 3D graphics. Knowledge of Three.js, a popular JavaScript library for creating 3D graphics, is also beneficial. Additionally, you should have a VR headset for testing your VR experiences.

How can I create VR experiences using JavaScript?

JavaScript, along with libraries like Three.js and A-Frame, can be used to create VR experiences. Three.js simplifies the process of working with WebGL, while A-Frame allows you to build VR scenes using HTML-like syntax. You can create 3D objects, add textures and lighting, and control camera movement using these tools.

What is WebVR and how does it relate to VR on the web?

WebVR is a JavaScript API that provides support for various virtual reality devices, such as the Oculus Rift, HTC Vive, and Google Cardboard, in a web browser. It allows developers to create immersive VR experiences on the web that are accessible to anyone with a VR device and a compatible browser.

Can I use other programming languages besides JavaScript to build VR on the web?

JavaScript is the primary language for web development, including VR. However, you can use languages that compile to JavaScript, such as TypeScript or CoffeeScript. Additionally, WebAssembly allows you to run code written in languages like C++ at near-native speed in the browser.

How can I optimize my VR experiences for the web?

Optimizing VR experiences for the web involves reducing latency, managing memory efficiently, and optimizing rendering. You can use techniques such as asynchronous loading, texture compression, and level of detail (LOD) to improve performance. Also, consider the limitations of the user’s hardware and network conditions.
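To make the level of detail idea a little more concrete, here is a small sketch of the selection step: pick the model whose distance threshold the camera has most recently passed. pickDetailLevel and the level objects are hypothetical; three.js ships its own THREE.LOD object for this job, which this sketch does not use.

```javascript
// Hypothetical LOD selection. `levels` is a list of
// { minDistance, name } entries; the entry with the largest
// minDistance that the camera has reached wins.
function pickDetailLevel(levels, distance) {
  var sorted = levels.slice().sort(function (a, b) {
    return a.minDistance - b.minDistance;
  });
  var chosen = sorted[0];
  sorted.forEach(function (level) {
    if (distance >= level.minDistance) {
      chosen = level;
    }
  });
  return chosen;
}

var detailLevels = [
  { minDistance: 0, name: 'high' },
  { minDistance: 50, name: 'medium' },
  { minDistance: 200, name: 'low' }
];

// pickDetailLevel(detailLevels, 10).name  -> 'high'
// pickDetailLevel(detailLevels, 75).name  -> 'medium'
// pickDetailLevel(detailLevels, 500).name -> 'low'
```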

How can I make my VR experiences accessible to users without VR devices?

You can create fallbacks for users without VR devices. For example, you can use the DeviceOrientation API to allow users to explore the VR scene by moving their mobile device. You can also provide a 360-degree view that users can navigate with their mouse or touch.

How can I test my VR experiences on the web?

You can test your VR experiences using a VR headset. If you don’t have a headset, you can use the WebVR emulator extension for Chrome and Firefox. This tool simulates the WebVR API and provides a 3D view of the VR scene.

How can I export my VR creations from Three.js to A-Frame?

You can use the glTF exporter in Three.js to export your 3D models in a format that A-Frame can read. glTF (GL Transmission Format) is a standard file format for 3D scenes and models.

What are some challenges I might face when building VR on the web?

Some challenges include handling user input in a 3D environment, optimizing performance, and ensuring compatibility across different VR devices and browsers. Additionally, creating realistic 3D graphics and animations can be complex.

Where can I learn more about building VR on the web?

There are many resources available online. Mozilla’s WebVR documentation is a great starting point. You can also check out tutorials and examples on the Three.js and A-Frame websites. Additionally, there are numerous online communities where you can ask questions and share your work.

Patrick Catanzariti

PatCat is the founder of Dev Diner, a site that explores developing for emerging tech such as virtual and augmented reality, the Internet of Things, artificial intelligence and wearables. He is a SitePoint contributing editor for emerging tech, an instructor at SitePoint Premium and O'Reilly, a Meta Pioneer and freelance developer who loves every opportunity to tinker with something new in a tech demo.